Proteogenomics 1: Database Creation

| Author(s) |

|

| Reviewers |

|

OverviewQuestions:

Objectives:

How to create a customized Protein Database from RNAseq data?

Requirements:

Generating a customized Protein sequence database with variants, indels, splice junctions and known sequences.

Time estimation: 30 minutesLevel: Intermediate IntermediateSupporting Materials:Published: Nov 20, 2018Last modification: May 8, 2025License: Tutorial Content is licensed under Creative Commons Attribution 4.0 International License. The GTN Framework is licensed under MITpurl PURL: https://gxy.io/GTN:T00231rating Rating: 5.0 (1 recent ratings, 10 all time)version Revision: 29

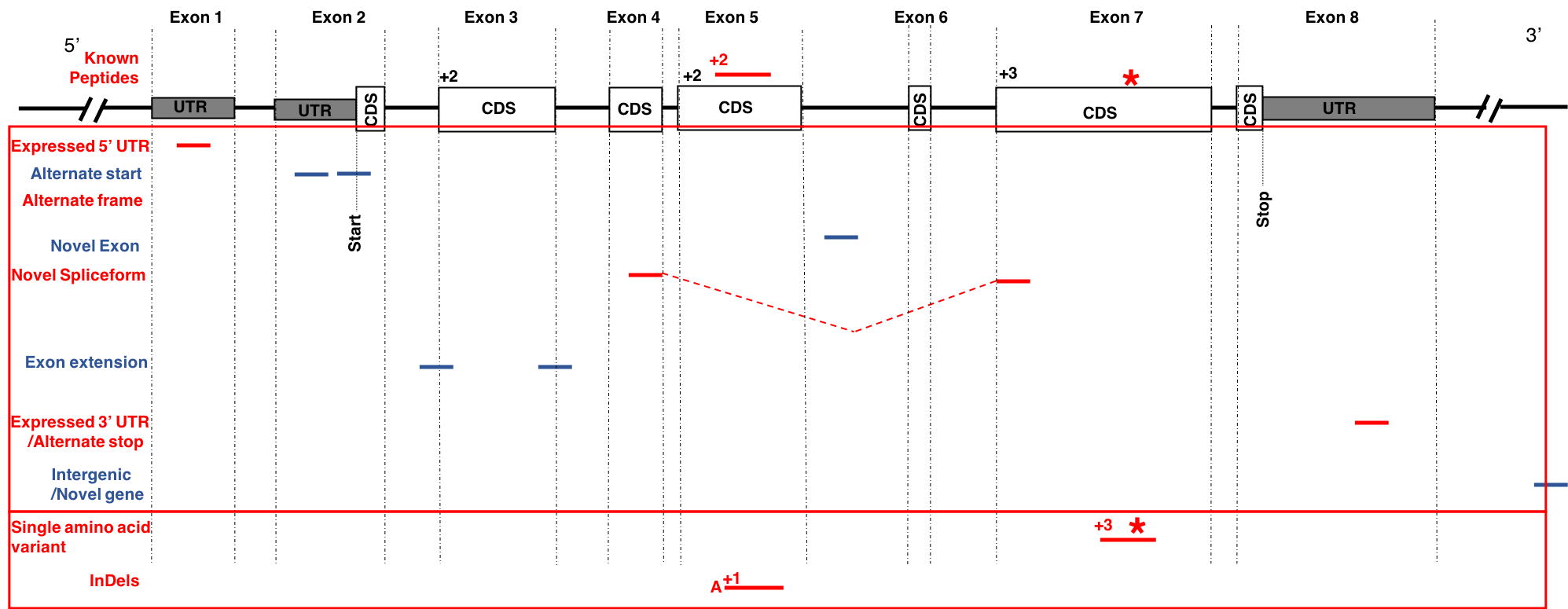

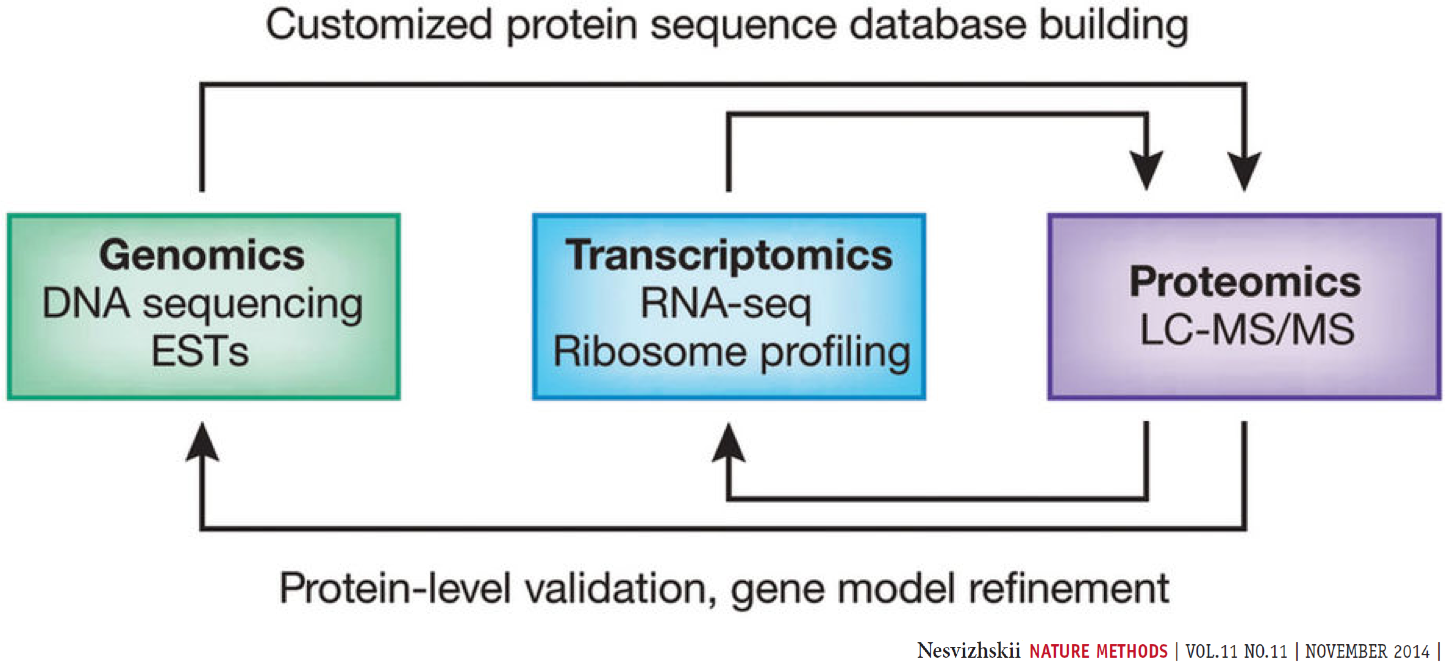

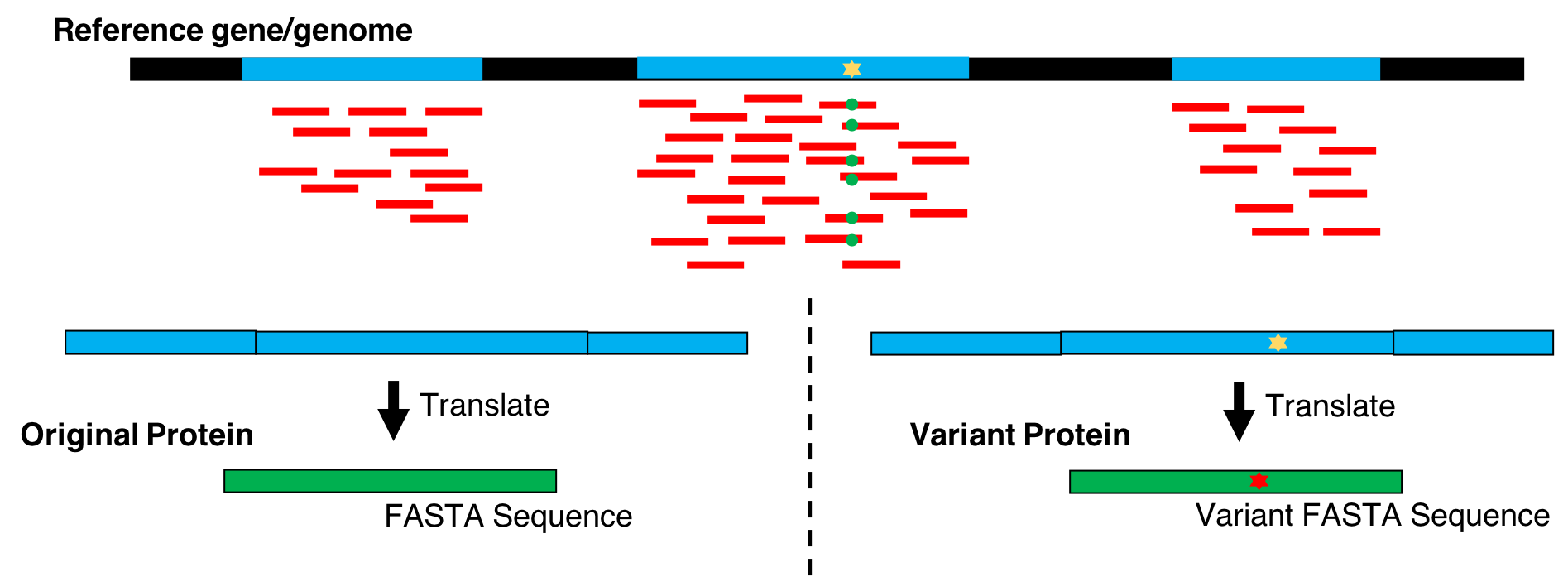

Proteogenomics involves the use of mass spectrometry (MS) based proteomics data against genomics and transcriptomics data to identify peptides and to understand protein-level evidence of gene expression. In the first section of the tutorial, we will create a protein database (FASTA) using RNA-sequencing files (FASTQ) and then perform sequence database searching using the resulting FASTA file with the MS data to identify peptides corresponding to novel proteoforms. Then, we will assign the genomic coordinates and annotations for these identified peptides and visualize the data for its spectral quality and genomic localization

Proteogenomics integrates RNA-Seq data for generating customized protein sequence databases with mass spectrometry-based proteomics data, which are matched to these databases to identify protein sequence variants. (Chambers et al. 2017).

AgendaIn this tutorial, we will cover:

Overview

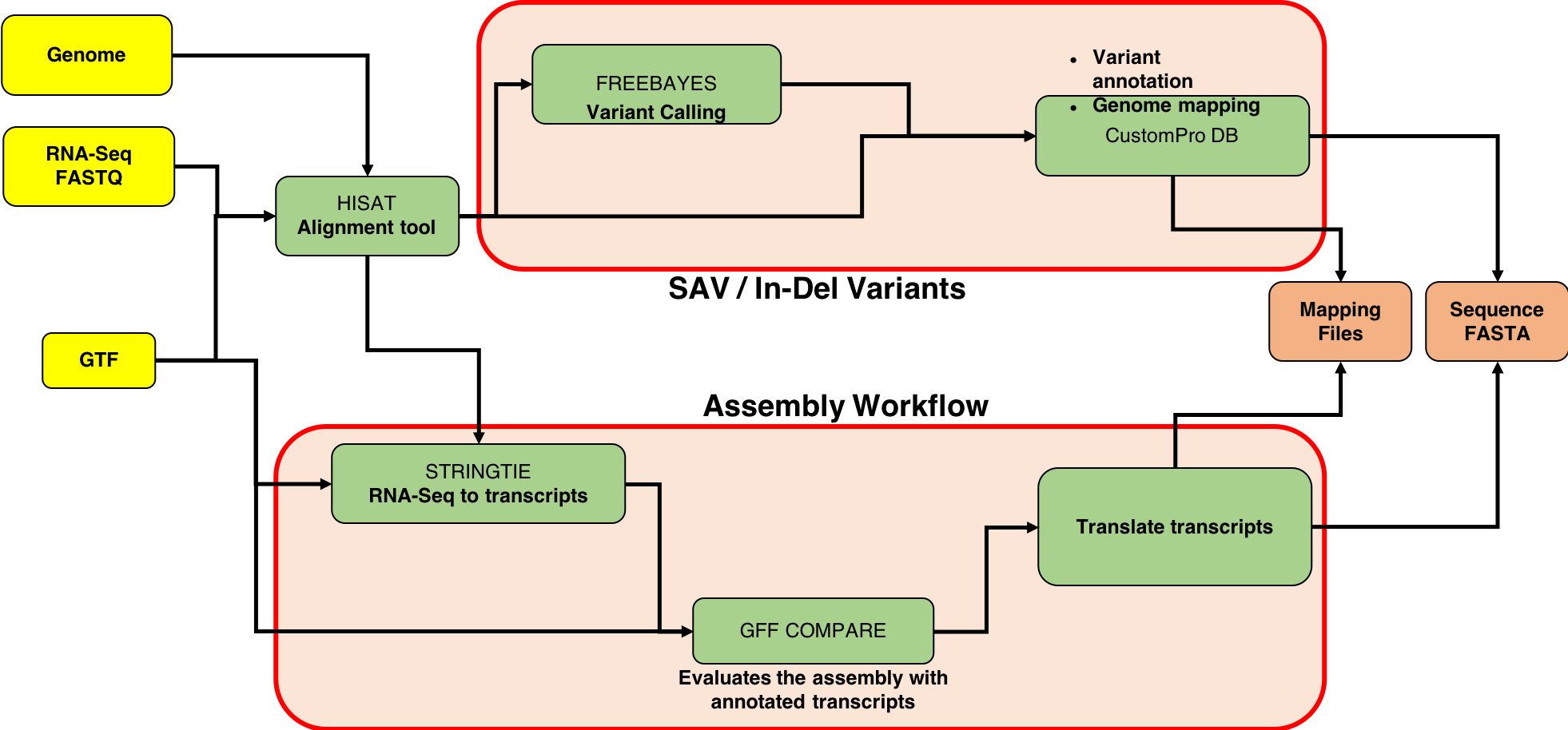

This tutorial focuses on creating a FASTA database generated from RNA-seq data. There are two outputs from this workflow: (1) a sequence database consisting of variants and known reference sequences and (2) mapping files containing genomic and variant mapping data.

The first part of the tutorial deals with creating the FASTA file containing sequences with single amino acid variants (SAVs), insertions and deletions (indels). The second part of the workflow helps in creating a FASTA file with transcript assemblies (splicing variants).

Data upload

In this tutorial, protein and the total RNA sample was obtained from the early development of B-cells from mice. It was obtained at two developmental stages of B-cells: Ebf1 -/- pre-pro-B and Rag2 -/- pro-B. Please refer to the original study Heydarian, M. et al.for details.

Hands On: data upload and organization

Create a new history and name it something meaningful (e.g. Proteogenomics DB creation)

To create a new history simply click the new-history icon at the top of the history panel:

- Import the Uniprot FASTA, FASTQ file and the GTF file from Zenodo

https://zenodo.org/records/1489208/files/Trimmed_ref_5000_uniprot_cRAP.fasta https://zenodo.org/record/1489208/files/FASTQ_ProB_22LIST.fastqsanger https://zenodo.org/record/1489208/files/Mus_musculus.GRCm38.86.gtf

- Copy the link location

Click galaxy-upload Upload at the top of the activity panel

- Select galaxy-wf-edit Paste/Fetch Data

Paste the link(s) into the text field

Press Start

- Close the window

Rename the datasets with more descriptive names (strip off the url prefixes)

- Click on the galaxy-pencil pencil icon for the dataset to edit its attributes

- In the central panel, change the Name field

- Click the Save button

Make sure that the datatype of file

FASTQ_ProB_22List.fastqsangeris set tofastqsanger

- Click on the galaxy-pencil pencil icon for the dataset to edit its attributes

- In the central panel, click galaxy-chart-select-data Datatypes tab on the top

- In the galaxy-chart-select-data Assign Datatype, select

fastqsangerfrom “New Type” dropdown

- Tip: you can start typing the datatype into the field to filter the dropdown menu

- Click the Save button

Make sure the Database/Build (dbkey) is set to

Mouse.Dec 2011 (GRCm38/mm10)(mm10)

- Click the desired dataset’s name to expand it.

Click on the “?” next to database indicator:

- In the central panel, change the Database/Build field

- Select your desired database key from the dropdown list:

Mouse.Dec 2011 (GRCm38/mm10)(mm10)- Click the Save button

- Note: If you are running the workflow taken from the GTN, then make sure the tool “Tabular-to-FASTA” (tool number 26) has Title Column labeled as “1” and Sequence Column as “2”.

Change the chromosome names in the Ensembl GTF to match a UCSC genome reference

UCSC prefaces chromosome names with chr while Ensembl does not. To perform this action, we use the Replace Text in a specific column - a regular expression tool.

Hands On: Replace Text in a specific column

- Replace Text in a specific column ( Galaxy version 9.5+galaxy0) with the following parameters:

- param-file “File to process”:

Mus_musculus.GRCm38.86.gtf- Change numbered chromosome names

- “in column”:

Column: 1- “Find pattern”:

^([1-9][0-9]*)$- “Replace with:

chr\\1- param-repeat Insert Replacement to add another replacement option to change XY chromosomes

- “in column”:

Column: 1- “Find pattern”:

^([XY])$- “Replace with:

chr\\1- param-repeat Insert Replacement to add another replacement option to change mitochondrial chromosome

- “in column”:

Column: 1- “Find pattern”:

^MT$- “Replace with:

chrM- Rename the output to

Mus_musculus.GRCm38.86.fixed.gtfComment: Note on chromosome name changesThis step is necessary to help identify and correct errors due to inputs having non-identical chromosome identifiers and/or different chromosome sequence content. Galaxy Support

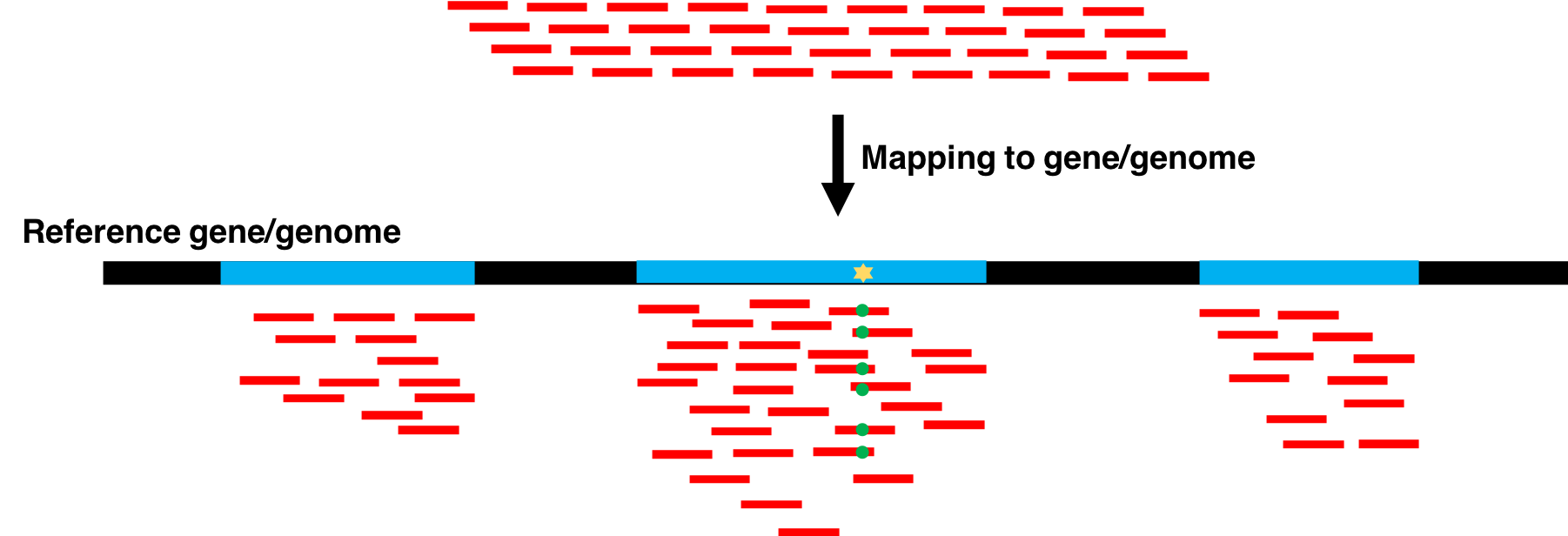

Aligning FASTQ files to the mouse genome

The first tool in the workflow is the HISAT2 alignment tool. It maps next-generation sequence (NGS) reads to the reference genome. This tool requires an RNA-seq file (.FASTQ) and a reference genome file in Gene transfer format (GTF). This .gtf file is obtained from the Ensembl database. When successful, this tool outputs a .bam file (binary version of a SAM: Sequence Alignment/Map).

Hands On: Alignment with HISAT2

- HISAT2 ( Galaxy version 2.2.1+galaxy1) with the following parameters:

- “Source for the reference genome”:

Use a built-in genome- “Select a reference genome”:

Mouse (Mus Musculus): mm10- “Single-end or paired-end reads”:

Single end- param-file “Input FASTQ files”:

FASTQ_ProB_22LIST.fastqsanger- “Specify strand information”:

Unstranded- Inspect galaxy-eye the resulting files

- Rename the output to

HISAT_Output.BAMComment: Note on strandednessNote that if your reads are from a stranded library, you need to choose the appropriate setting for “Specify strand information” mentioned above. For single-end reads, use

ForR.Fmeans that a read corresponds to a forward transcript.Rmeans that a read corresponds to the reverse complemented counterpart of a transcript.For paired-end reads, use either

FRorRF. With this option being used, every read alignment will have an XS attribute tag:+means a read belongs to a transcript on the positive+strand of the genome.-means a read belongs to a transcript on the negative-strand of the genome. (TopHat has a similar option,--library--typeoption, where fr - first strand corresponds to R and RF; fr - second strand corresponds to F and FR.)

Variant Analysis

Variant Calling

FreeBayes is a Bayesian genetic variant detector designed to find small polymorphisms, specifically SNPs (single-nucleotide polymorphisms), indels (insertions and deletions), MNPs (multi-nucleotide polymorphisms), and complex events (composite insertion and substitution events) smaller than the length of a short-read sequencing alignment.

Provided with some BAM dataset(s) and a reference sequence, FreeBayes will generate a VCF dataset describing SNPs, indels, and complex variants in samples in the input alignments. By default, FreeBayes will consider variants supported by at least two observations in a single sample (-C) and also by at least 20% of the reads from a single sample (-F). These settings are suitable for low to high depth sequencing in haploid and diploid samples, but users working with polyploid or pooled samples may adjust them depending on the characteristics of their sequencing data.

FreeBayes is capable of calling variant haplotypes shorter than a read length where multiple polymorphisms segregate on the same read. The maximum distance between polymorphisms phased in this way is determined by the --max-complex-gap, which defaults to 3bp. In practice, this can comfortably be set to half the read length. Ploidy may be set to any level (-p), but by default all samples are assumed to be diploid. FreeBayes can model per-sample and per-region variation in copy-number (-A) using a copy-number variation map.

FreeBayes can act as a frequency-based pooled caller and can describe variants and haplotypes in terms of observation frequency rather than called genotypes. To do so, use --pooled-continuous and set input filters to a suitable level. Allele observation counts will be described by AO and RO fields in the VCF output.

Hands On: Variant Calling with FreeBayes

- FreeBayes ( Galaxy version 1.3.9+galaxy0) with the following parameters:

- “Choose the source for the reference genome”:

Locally cached file

- “Run in batch mode?”:

Run Individually- param-file “BAM dataset::

HISAT_Output.BAM- “Using reference genome”:

Mouse (Mus Musculus): mm10- “Limit variant calling to a set of regions?”:

Do not Limit- “Choose parameter selection level”:

Simple diploid calling- Inspect galaxy-eye the resulting files.

Note: FreeBayes may take some time…be patient!!!!

Comment: FreeBayes optionsGalaxy allows five levels of control over FreeBayes options, provided by the Choose parameter selection level menu option. These are:

Simple diploid calling: The simplest possible FreeBayes application. Equivalent to using FreeBayes with only a BAM input and no other parameter options.

Simple diploid calling with filtering and coverage: Same as #1 plus two additional options: (1)

-0(standard filters:--min-mapping-quality 30 --min-base-quality 20 --min-supporting-allele -qsum 0 --genotype-variant-threshold 0) and (2)--min-coverage.Frequency-based pooled calling: This is equivalent to using FreeBayes with the following options:

--haplotype-length 0 --min-alternate-count 1 --min-alternate-fraction 0 --pooled -continuous --report- monomorphic. This is the best choice for calling variants in mixtures such as viral, bacterial, or organellar genomes.Frequency-based pooled calling with filtering and coverage: Same as #3 but adds

-0and--min-coveragelike in #2.Complete list of all options: Gives you full control by exposing all FreeBayes options as Galaxy parameters.

Variant annotation and Genome Mapping

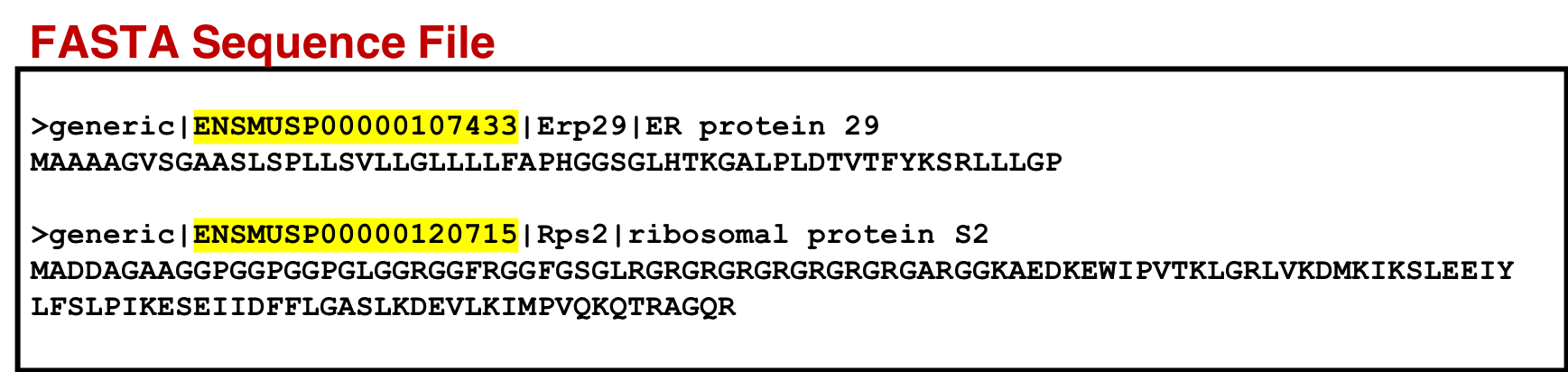

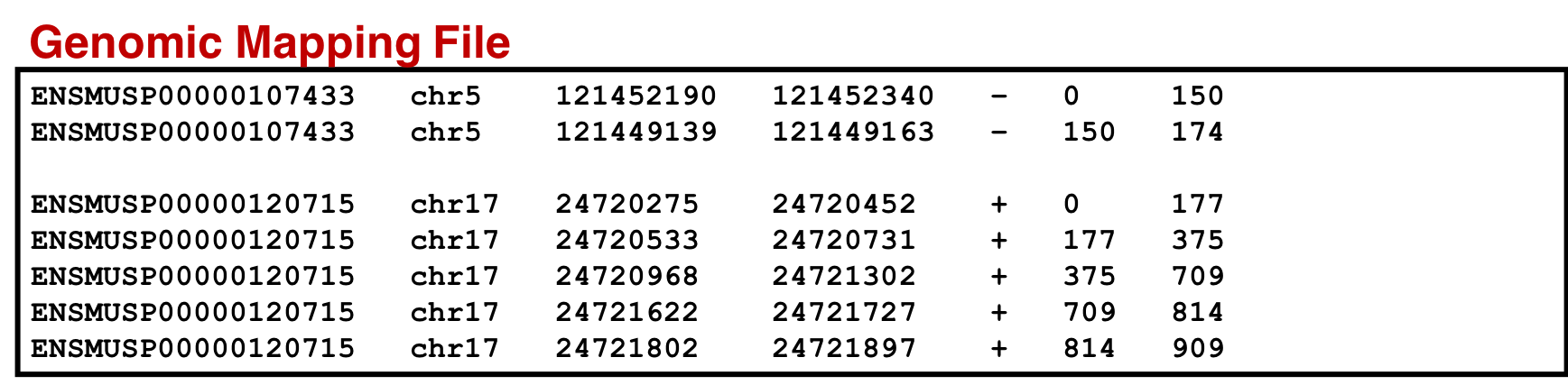

CustomProDB (Wang and Zhang 2013) generates custom protein FASTA sequences files from exosome or transcriptome data. Once Freebayes creates the .vcf file, CustomProDB uses this file to generate a custom protein FASTA file from the transcriptome data. For this tool, we use Ensembl 89 mmusculus (GRm38.p5) (dbsnp142) as the genome annotation. We create three FASTA files from CustomProDB: (1) a variant FASTA file for short indels, (2) a Single Amino acid Variant (SAV) FASTA file, an SQLite database file (genome mapping and variant mapping) for mapping proteins to a genome and (3) an RData file for variant protein coding sequences. The reference protein set can be filtered by transcript expression level (RPKM calculated from a BAM file), and variant protein forms can be predicted based on variant calls (SNPs and indels reported in a VCF file).

Annotations CustomProDB depends on a set of annotation files (in RData format) to create reference and variant protein sequences. Galaxy administrators can use the CustomProDB data manager to create these annotations to make them available for users.

Hands On: Generate protein FASTAs from exosome or transcriptome data

- CustomProDB ( Galaxy version 1.22.0) with the following parameters:

- “Will you select a genome annotation from your history or use a built-in annotation?”:

Use built in genome annotation

- “Using reference genome”:

Ensemble 89 mmusculus (GRm38.p5) (dbsnp142)- param-file “BAM file”:

HISAT_Output.BAM- param-file “VCF file”:

Freebayes.vcf- “Create a variant FASTA for short insertions and deletions”:

Yes- “Create SQLite files for mapping proteins to genome and summarizing variant proteins”:

Yes- “Create RData file of variant protein coding sequences”:

Yes- Inspect galaxy-eye the resulting files

There are 6 files are generated through the CustomProDB tool:

- rpkm.fasta: reference proteins filtered by transcript expression level (RPKM calculated from a BAM file)

- snv.fasta: Single Nucleotide Variants detected by FreeBayes (.vcf file)

- indel.fasta: Insertions/Deletions detected by FreeBayes (.vcf file)

- Genomic_mapping.sqlite: mapping proteins to genome

- Variant_annotation.sqlite: used to visualize the variants in the Multi-omics Visualization Platform

- RData: set of annotation files (in RData format) to create reference and variant protein sequences

For mapping proteins to genome and an RData file for variant protein coding sequences. Similar to the genomic mapping, a variant mapping file is also generated from CustomProDB. This SQLite file is also converted to tabular format and made SearchGUI-compatible. This variant annotation file will be used to visualize the variants in the Multi-omics Visualization Platform (in-house visualization platform developed by Galaxy-P senior developers).

Transcript Assembly

RNA-seq to transcripts

StringTie is a fast and highly efficient assembler of RNA-Seq alignments into potential transcripts. It uses a network flow algorithm as well as an optional de novo assembly step to assemble and quantitate full-length transcripts representing multiple splice variants for each gene locus.

Its input can include not only the alignments of raw reads used by other transcript assemblers, but also alignments of longer sequences that have been assembled from those reads. To identify differentially expressed genes between experiments, StringTie’s output can be processed by specialized software like Ballgown, Cuffdiff or other programs (DESeq2, edgeR, etc.).

Hands On: Transcript assembly with StringTie

- StringTie ( Galaxy version 2.2.3+galaxy0) with the following parameters:

- param-file “Input mapped reads”:

HISAT_Output.BAM- “Specify strand information”:

Unstranded- “Use a reference file to guide assembly?”:

Use Reference GTF/GFF3

- “Reference file”:

Use file from History- param-file “GTF/GFF3 dataset to guide assembly”:

Mus_musculus.GRCm38.86.fixed.gtf- Inspect galaxy-eye the resulting files.

Rename the output to

Stringtie_output.gtf

- Click on the galaxy-pencil pencil icon for the dataset to edit its attributes

- In the central panel, change the Name field

- Click the Save button

Make sure the datatype is

gtf

- Click on the galaxy-pencil pencil icon for the dataset to edit its attributes

- In the central panel, click galaxy-chart-select-data Datatypes tab on the top

- In the galaxy-chart-select-data Assign Datatype, select your desired datatype from “New Type” dropdown

- Tip: you can start typing the datatype into the field to filter the dropdown menu

- Click the Save button

StringTie accepts a BAM (or SAM) file of paired-end RNA-seq reads, which must be sorted by genomic location (coordinate position). This file contains spliced read alignments and can be produced directly by programs such as HISAT2. We recommend using HISAT2 as it is a fast and accurate alignment program. Every spliced read alignment (i.e. an alignment across at least one junction) in the input BAM file must contain the tag XS to indicate the genomic strand that produced the RNA from which the read was sequenced. Alignments produced by HISAT2 (when run with the --dta option) already include this tag, but if you use a different read mapper

you should check that this XS tag is included for spliced alignments.

Be sure to run HISAT2 with the

--dtaoption for alignment (under ‘Spliced alignment options’), for the best results.Also note that if your reads are from a stranded library, you need to choose the appropriate setting for ‘Specify strand information’ above. As, if Forward (FR) is selected, StringTie will assume the reads are from a

--frlibrary, while if Reverse (RF) is selected, StringTie will assume the reads are from a--rflibrary, otherwise it is assumed that the reads are from an unstranded library (the widely-used, although now deprecated, TopHat had a similar--library-typeoption, where fr-firststrand corresponded to RF and fr-secondstrand corresponded to FR). If you don’t know whether your reads are from a stranded library or not, you could use the tool ‘RSeQC Infer Experiment’ to try to determine.As an option, a reference annotation file in GTF/GFF3 format can be provided to StringTie. In this case, StringTie will prefer to use these “known” genes from the annotation file, and for the ones that are expressed it will compute coverage, TPM and FPKM values. It will also produce additional transcripts to account for RNA-seq data that aren’t covered by (or explained by) the annotation. Note that if option

-eis not used, the reference transcripts need to be fully covered by reads in order to be included in StringTie’s output. In that case, other transcripts assembled from the data by StringTie and not present in the reference file will be printed as well.We highly recommend that you provide annotation if you are analyzing a genome that is well annotated, such as human, mouse, or other model organisms.

Evaluate the assembly with annotated transcripts

GffCompare compares and evaluates the accuracy of RNA-Seq transcript assemblers (Cufflinks, Stringtie). * collapse (merge) duplicate transcripts from multiple GTF/GFF3 files (e.g. resulted from assembly of different samples) * classify transcripts from one or multiple GTF/GFF3 files as they relate to reference transcripts provided in a annotation file (also in GTF/GFF3 format)

The original form of this program is also distributed as part of the Cufflinks suite, under the name CuffCompare. Most of the options and parameters of CuffCompare are supported by GffCompare, while new features will likely be added to GffCompare in the future.

Hands On: compare assembled transcripts against a reference annotation

- GffCompare ( Galaxy version 0.12.6+galaxy0) with the following parameters:

- param-file “GTF inputs for comparison”

Stringtie_output.gtf- “Use Reference Annotation”:

yes

- “Choose the source for the reference annotation”:

History

- param-file “Reference Annotation”:

Mus_musculus.GRCm38.86.gtf- “Strict match”:

yes

A notable difference between GffCompare and CuffCompare is that when a single query GTF/GFF file is given as input along with a reference annotation (

-roption), GFFCompare switches into “annotation mode” and it generates a.annotated.gtffile instead of the.merged.gtfproduced by CuffCompare with the same parameters. This file has the same general format as CuffCompare’s .merged.gtf file (with “class codes” assigned to transcripts as per their relationship with the matching/overlapping reference, transcript) but the original transcript IDs are preserved, so GffCompare can thus be used as a simple way of annotating a set of transcripts.Another important difference is that the input transcripts are no longer discarded when they are found to be “intron redundant”, i.e. contained within other, longer isoforms. CuffCompare had the

-Goption to prevent collapsing of such intron redundant isoforms into their longer “containers”, but GffCompare has made this the default mode of operation (hence the-Goption is no longer needed and is simply ignored when given).

Translate transcripts

Hands On: Translate transcripts to protein sequenceFirst, we must convert the GffCompare annotated GTF file to BED format.

- Convert gffCompare annotated GTF to BED ( Galaxy version 0.2.1) with the following parameters:

- param-file “GTF annotated by gffCompare”:

output from gff compare- “filter GffCompare class_codes to convert”:

- param-check

j : Potentially novel isoform (fragment): at least one splice junction is shared with a reference transcript- param-check

e : Single exon transfrag overlapping a reference exon and at least 10 bp of a reference intron, indicating a possible pre-mRNA fragment.- param-check

i : A transfrag falling entirely within a reference intron- param-check

p : Possible polymerase run-on fragment (within 2Kbases of a reference transcript)- param-check

u : Unknown, intergenic transcriptNext, we translate transcripts from the input BED file into protein sequences.

- Translate BED transcripts cDNA in 3frames or CDS ( Galaxy version 0.1.0) with the following parameters:

- param-file “A BED file with 12 columns”:

Convert GffCompare-annotated GTF to BED- “Source for Genomic Sequence Data”:

Locally cached twobit- “Select reference 2bit file”:

mm10- “Fasta ID Options”

- “fasta ID source, e.g. generic”:

genericFinally, we convert a BED format file of the proteins from a proteomics search database into a tabular format for the Multiomics Visualization Platform (MVP).

- bed to protein map ( Galaxy version 0.2.0) with the following parameters:

- param-file “A BED file with 12 columns, thickStart and thickEnd define protein coding region”:

Translate cDNA_minus_CDS

Comment: Note on data formats

- The tabular output can be converted to a sqlite database using the Query_Tabular tool.

- The sqlite table should be named: feature_cds_map.

- The names for the columns should be: name, chrom, start, end, strand, cds_start, cds_end

- This SQL query will return the genomic location for a peptide sequence in a protein (multiply the amino acid position by 3 for the cds location)

Creating FASTA Databases

In this section we will perform the following tasks:

- The Protein Database Downloader tool is used to download the FASTA database from UniProt and cRAP (common Repository of Adventitious Proteins) database containing known/reference mouse proteins. For the purpose of this tutorial, we will use the

Reference_5000_uniprot_cRAP.fastafile as this database. - The FASTA Merge Files and Filter Unique Sequences tool is used to merge the databases obtained from CustomProDB and “Translate BED” tool along with the UniProt and cRAP databases.

- The Regex Text Manipulation tool is used to manipulate the FASTA file to make it SearchGUI-compatible.

Hands On

- FASTA Merge Files and Filter Unique Sequences ( Galaxy version 1.2.0) with the following parameters:

- “Run in batch mode?”:

Merge individual FASTAs (output collection if input is collection)- Click param-repeat “Insert Input FASTA File(s)” three times so that there are a total of three “Input FASTA File(s)” blocks.

- Use the following parameters:

- param-file “Input FASTA File(s)” :

1.HISAT2_Output.rpkm 2.HISAT2_Output.snv 3.HISAT2_Output.indel- “How are sequences judged to be unique?”:

Accession and Sequence- “Accession Parsing Regular Expression”:

^>([^ ]+).*$- Rename the output

Merged and Filtered FASTA from CustomProDB

Comment: Tool parameters explainedThis tool concatenates FASTA database files together.

- If the uniqueness criterion is “Accession and Sequence”, only the first appearence of each unique sequence will appear in the output. Otherwise, duplicate sequences are allowed, but only the first appearance of each accession will appear in the output.

- The default accession parser will treat everything in the header before the first space as the accession.

Comment: CustomProDB generated protein Accession names need to be changed for PeptideShakerPeptideShaker does not allow greaterthan sign, comma, or spaces in the protein accession name. PeptideShaker also requires the FASTA ID to start with a source, e.g. >sp|Q8C4J7| for SwissProt entries. Nonstandard entries must be prefixed generic|. We will also need to similarily modify the accessions in the genomic mapping and and variant annotation outputs. For eg: ENSEMBL-PRO (output from CustomProDB) and STRG (from StringTie) are not standard format.

Hands On: FASTA to Tabular

- FASTA-to-Tabular ( Galaxy version 1.1.1) with the default parameters

- param-file “Convert these sequences”:

Merged and Filtered FASTA from CustomProDB- Column Regex Find And Replace ( Galaxy version 1.0.3) with the following parameters:

- param-file “Select cells from”:

FASTA-to-Tabular outputfrom previous step- “Using”:

Column: 1- param-repeat Insert Check

- “Find Regex” :

^(ENS[^_]+_\d+:)([ACGTacgt]+)>([ACGTacgt]+)\s*- “Replacement”:

\1\2_\3- param-repeat Insert Check

- “Find Regex”:

,([A-Y]\d+[A-Y]?)\s*- “Replacement”:

.\1- param-repeat Insert Check

- “Find Regex”:

^(ENS[^ |]*)\s*- “Replacement”:

generic|\1- Tabular-to-FASTA ( Galaxy version 1.1.1) with the following parameters:

- param-file “Tab-delimited file”:

Column Regex Find And Replace Output- “Title column”:

Column: 1- “Sequence Column”:

Column: 2- Rename it as

CustomProDB Merged Fasta

Comment: FASTA-to-Tabular and Tabular-to-FASTA versionsPlease make sure the tool version is 1.1.1 Also, while running the workflow, the Title column for Tabular-to-FASTA might come empty, so please make sure you select Title Column as “1”.

For visualization purposes we also use the concatenate tool to concatenate the genomic mapping with the protein mapping dataset. This output will be used for visualization in MVP to view the genomic coordinates of the variant peptide.

We also need to modify the CustomProDB protein accessions the same as was done for the FASTA database.

An SQLite database containing the genomic mapping SQLite, variant annotation and information from the protein mapping file is concatenated to form a single genomic mapping SQLite database later used as an input for the ‘Peptide Genomic Coordinate’ tool. For that we need to follow the steps below:

Genomic mapping database

Hands On: Create Database for genomic mapping

- SQLite to tabular for SQL query ( Galaxy version 3.2.1) with the following parameters:

- param-file “SQLite Database”:

genomic_mapping.sqlitefrom CustomProDB- param-text “Query”:

SELECT pro_name, chr_name, cds_chr_start - 1, cds_chr_end,strand,cds_start - 1, cds_end FROM genomic_mapping ORDER BY pro_name, cds_start, cds_end- param-file “Omit column headers from tabular output”:

NoRename the output as

genomic_mapping_sqlite

- Click on the galaxy-pencil pencil icon for the dataset to edit its attributes

- In the central panel, change the Name field

- Click the Save button

The output is further processed so that the results are compatible with the Multiomics Visualization Platform. We need to modify the CustomProDB protein accessions the same as was done for the FASTA database.

- Column Regex Find And Replace ( Galaxy version 1.0.3) with the following parameters:

- param-file “Select cells from”:

genomic_mapping_sqlite' (tabular)- “Using”:

column 1- param-repeat Insert Check

- “Find Regex” :

^(ENS[^_]+_\d+:)([ACGTacgt]+)>([ACGTacgt]+)\s*- “Replacement”:

\1\2_\3- param-repeat Insert Check

- “Find Regex”:

,([A-Y]\d+[A-Y]?)\s*- “Replacement”:

.\1- param-repeat Insert Check

- “Find Regex”:

^(ENS[^ |]*)\s*- “Replacement”:

\1Rename the output as

SAV_INDELThis tool goes line by line through the specified input file and if the text in the selected column matches a specified regular expression pattern, it replaces the text with the specified replacement.

Next, we will concatenate the output from this tool with the “Bed to protein map” output.

- Concatenate multiple datasets ( Galaxy version 1.4.3) with the following parameters:

- param-files “Concatenate Datasets”: Select the output from the previous tool named as

SAV_INDELand the output from thebed to protein maptool and run the tool.- Rename the output as

Genomic_Protein_mapNow, we load this tabular datasets into an SQLite database.

- Query Tabular using SQLite ( Galaxy version 3.3.2) with the following parameters:

- param-repeat Insert Database Table

- param-file “Tabular Dataset for Table”:

Genomic_Protein_map- Section Table Options:

- “Specify Name for Table”:

feature_cds_map- “Specify Column Names (comma-separated list)”:

name,chrom,start,end,strand,cds_start,cds_end- param-repeat Insert Table Index:

- “This is a unique index”:

No- “Index on columns”:

name,cds_start,cds_end- “Save the sqlite database in your history”:

YesRename the output as

genomic_mapping_sqlitedb

Variant Annotations database

We will repeat the process for the variant annotations (this file will be used as a input to the new updates that will be made on the Multiomics Visualization platform). We need to modify the CustomProDB protein accessions the same as was done for the FASTA database.

Hands On: Create database for variant annotations

- SQLite to tabular for SQL query ( Galaxy version 3.2.1) with the following parameters:

- param-file “SQLite Database”:

variant_annotations.sqlitefrom CustomProDB- “Query”:

SELECT var_pro_name,pro_name,cigar,annotation FROM variant_annotationRename the output as

variant_annotation_sqliteWe will subject the output to text manipulation so that the results are compatible with the Multiomics Visualization Platform.

- Column Regex Find And Replace ( Galaxy version 1.0.3) with the following parameters:

- param-file “Select cells from”:

variant_annotations_sqlite' (tabular)- “Using:”

Column: 1- param-repeat Insert Check

- “Find Regex”:

^(ENS[^_]+_\d+:)([ACGTacgt]+)>([ACGTacgt]+)\s*- “Replacement”:

\1\2_\3- param-repeat Insert Check:

- “Find Regex”:

,([A-Y]\d+[A-Y]?)\s*- “Replacement”:

.\1- param-repeat Insert Check:

- “Find Regex”:

^(ENS[^ |]*)\s*- “Replacement”:

\1Rename the output as

variant_annotationNext, we will load this tabular dataset into an SQLite database.

- Query Tabular using SQLite ( Galaxy version 3.3.2) with the following parameters:

- param-repeat Insert Database Table

- param-file “Tabular Dataset for Table”:

variant_annotation- Section Table Options:

- “Specify Name for Table”:

variant_annotation- “Specify Column Names (comma-separated list)”:

name,reference,cigar,annotation- param-repeat Insert Table Index

- “This is a unique index”:

No- “Index on columns”:

name,cigar- “Save the sqlite database in your history”:

Yes- Rename the output as

Variant_annotation_sqlitedb

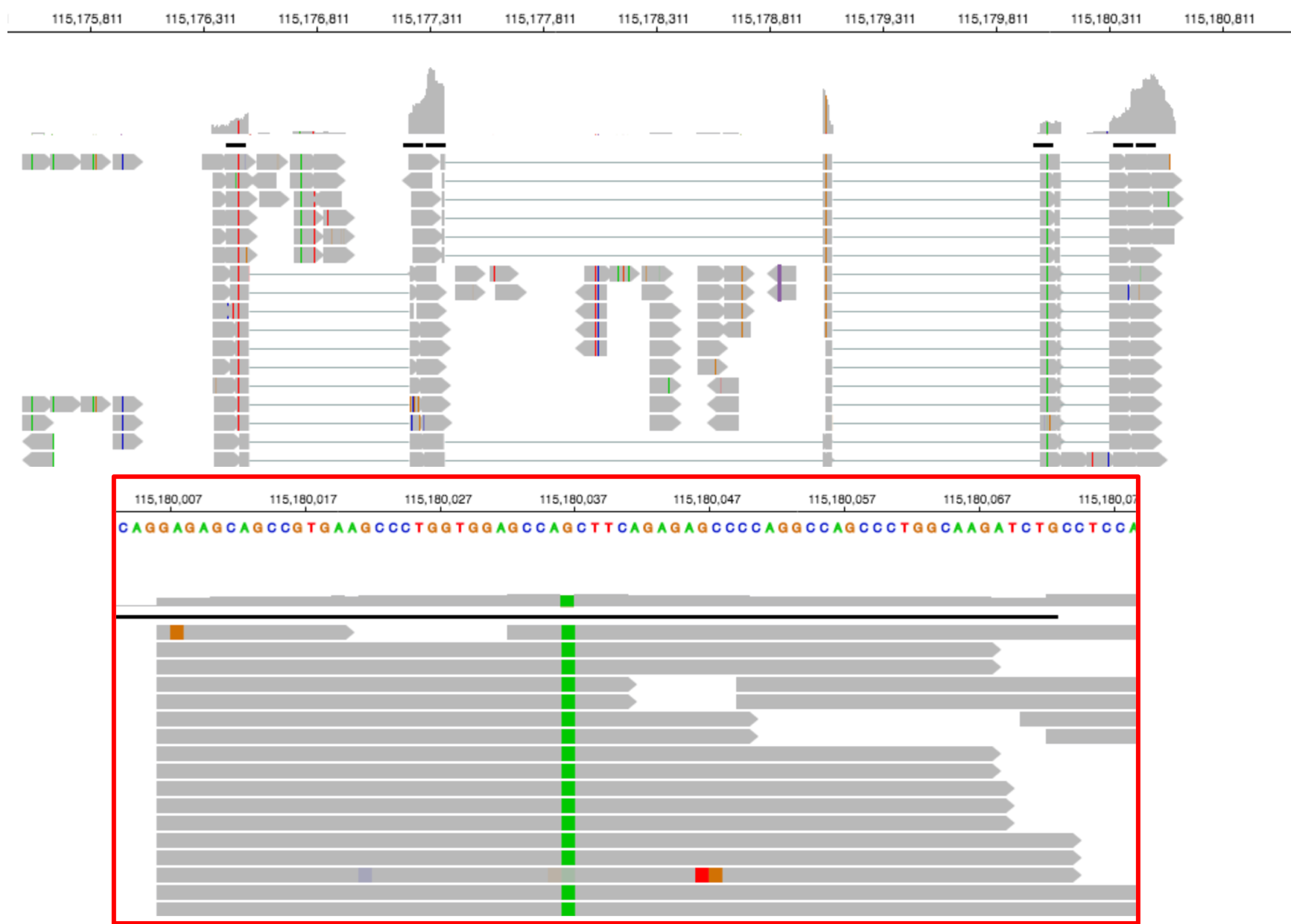

These SQLite databases, which contain the genomic mapping SQLite and variant annotation information from the protein mapping file, will be utilized by MVP to visualize the genomic loci of any variant peptides.

Finally, we can create a database which can be used to search Mass spectrometry (raw/mgf) files to identify peptides that match to this database.

To do so:

Hands On

- FASTA Merge Files and Filter Unique Sequences ( Galaxy version 1.2.0) with the following parameters:

- “Run in batch mode?”:

Merge individual FASTAs (output collection if input is collection)- Click param-repeat “Insert Input FASTA File(s)” three times so that there are a total of three “Input FASTA File(s)” blocks.

- param-files “Input FASTA File(s)” :

Custom ProDB Merged Fasta File output- param-files “Input FASTA File(s)” :

Translate BED transcripts Fasta output- param-files “Input FASTA File(s)” :

Reference_5000_uniprot_cRAP.fasta- “How are sequences judged to be unique?”:

Accession and Sequence- “Accession Parsing Regular Expression”:

^>([^ ]+).*$

Comment: Tool parameters explainedThis tool concatenates FASTA database files together.

- If the uniqueness criterion is “Accession and Sequence”, only the first appearence of each unique sequence will appear in the output. Otherwise, duplicate sequences are allowed, but only the first appearance of each accession will appear in the output.

- The default accession parser will treat everything in the header before the first space as the accession.

List of Reference Protein Accession names

Generate a list of Reference Proteins. Identify peptides that are contained in the reference proteins will be filtered out in the next tutorial.

Hands On: FASTA to Tabular

- FASTA-to-Tabular ( Galaxy version 1.1.1) with the default parameters:

- param-file “Convert these sequences”:

HISAT_Output.rpkm- Filter Tabular ( Galaxy version 3.3.1) with these filters:

- param-file “Tabular Dataset to filter”: output of

FASTA-to-Tabularon HISAT_Output.rpkm- param-repeat Insert Filter Tabular Input Lines

- “Filter by”:

select columns- “enter column numbers to keep”:

1- param-repeat Insert Filter Tabular Input Lines

- “Filter by”:

regex replace value in column- “enter column number to replace”:

1- “regex pattern”:

^([^ |]+).*$- “replacement expression”:

\1- FASTA-to-Tabular ( Galaxy version 1.1.1) with the default parameters:

- param-file “Convert these sequences”:

Trimmed_ref_5000_uniprot_cRAP.fasta- Filter Tabular ( Galaxy version 3.3.1) with these filters:

- param-file “Tabular Dataset to filter”: output of

FASTA-to-Tabularon Trimmed_ref_5000_uniprot_cRAP.fasta- param-repeat Insert Filter Tabular Input Lines

- “Filter by”:

select columns- “enter column numbers to keep”:

1- param-repeat Insert Filter Tabular Input Lines

- “Filter by”:

regex replace value in column- “enter column number to replace”:

1- “regex pattern”:

^[^|]+[|]([^| ]+).*$- “replacement expression”:

\1- Concatenate multiple datasets ( Galaxy version 1.4.3) with the following parameters:

- param-files “Concatenate Datasets”: Select the output from the previous 2 “Filter Tabular” outputs.

Comment: FASTA-to-Tabular and Tabular-to-FASTA versionsPlease make sure the tool version is 1.1.1

Comment: Tool versions

- All the tools mentioned in this tutorial are subjected to change when the tool version is upgraded .

Conclusion

This completes the walkthrough of the proteogenomics database creation workflow. This tutorial is a guide to have a database and mapping files ready for Database searching and novel peptide analysis. Researchers can use this workflow with their data also, please note that the tool parameters, reference genomes and the workflow will be needed to be modified accordingly.

This workflow was developed by the Galaxy-P team at the University of Minnesota. For more information about Galaxy-P or our ongoing work, please visit us at galaxyp.org